Key Takeaways

AI outputs are only as good as the input we give them.

When AI makes mistakes, the scale can be massive and fast.

AI should act as a first-pass filter to quickly flag potential issues. Then, auditors can step in to do more in-depth work.

Audit firms that overuse AI may cause you to miss out on key savings.

AI is undeniably powerful, but it can’t replace the expertise, intuition, and judgment of human auditors. Let’s explore why.

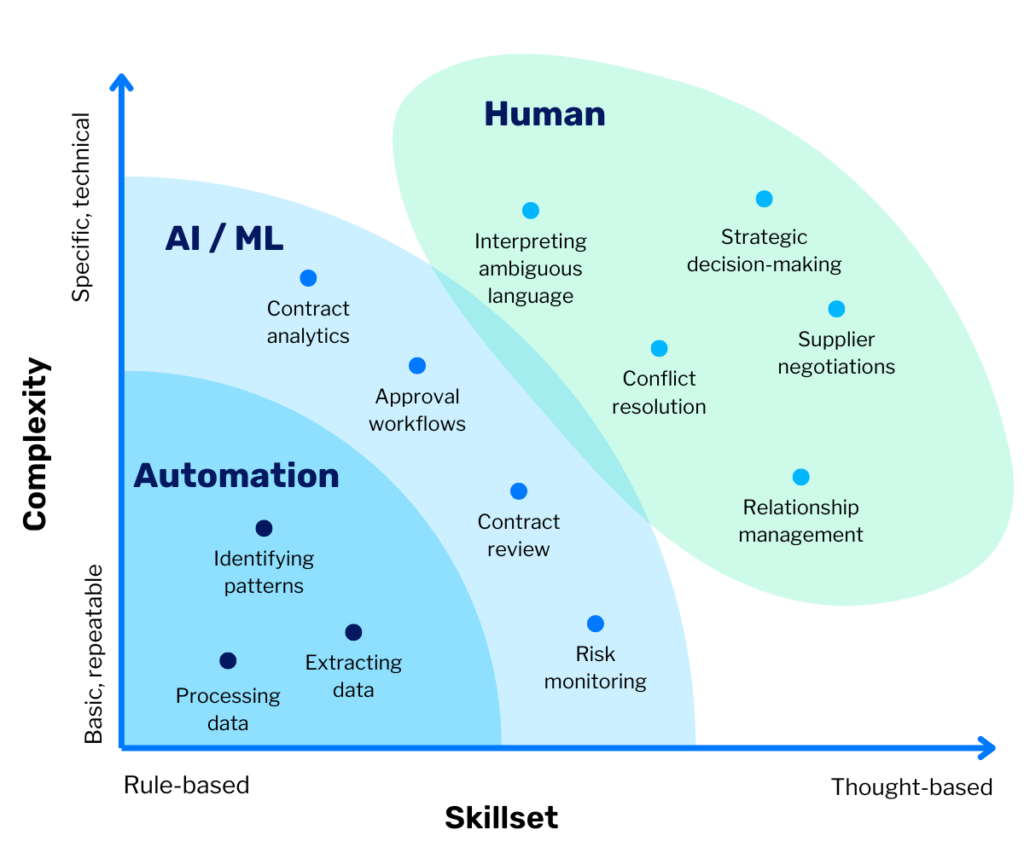

The biggest benefit of AI in contract management is its ability to perform repetitive tasks at scale. This lets contract teams process large amounts of data, review contracts, and visualize patterns quickly and accurately. But there’s one thing AI cannot do: think.

I read articles about automating contracts with AI every day. Look deeper, though, and you’ll find that while the technology is impressive, these tools can’t manage complex or nuanced relationships. Those relationships often rely on third-party data inputs, which is where non-compliance, overbilling, and other contract issues tend to arise. This is where human expertise is crucial.

AI can’t replace human auditors, but it can make audit procedures more efficient and effective. The best auditors use AI as a tool to improve their process, not as a crutch. Let’s dig into what AI can and can’t do when analyzing your contracts for compliance.

AI in Contract Compliance: What It Can Do

Gartner research predicts that 50% of organizations will use AI-enabled contract risk analysis and editing tools to support contract management by 2027. This comes as no surprise, as the technology is incredibly helpful for simple, time-consuming tasks such as:

- Analyzing large volumes of contracts quickly

- Automating review processes

- Flagging potential risks and discrepancies that need a closer look

AI tools excel at spotting issues and conducting initial reviews. This view is widely shared across the industry: data and clause extraction were the #1 AI use case for contract teams in 2024. Automating menial tasks frees up time to focus on more value-adding activities.

Many believe AI can prevent or address compliance issues, but that’s not always the case. While AI is excellent at processing data, it struggles to validate data authenticity. News articles are littered with humorous or alarming examples of AI confidently stating factually incorrect things, like Google’s AI Overview tool telling readers to eat one small rock a day, or Twitter/X’s AI bot accusing an NBA player of vandalizing homes after misinterpreting basketball lingo.

AI in Contract Compliance: What It Can’t Do

AI outputs are only as good as the input and instructions we give them, because AI is not yet equipped to verify information. For example, incorrect input might mean an ML tool skips a cross-referenced contract because the two documents weren’t linked properly, or misinterprets an ambiguous term that was never added to its library.

This problem resembles one faced by smart contracts on a blockchain, which rely on data oracles for outside information. If the oracle provides inaccurate information, the smart contract won’t work correctly. Likewise, traditional contracts are at risk when the third-party data they depend on is incorrect. That’s why there’s still a clear need for human judgment to assess risk and validate contract clauses. This is where a skilled auditor’s expertise shines.

Like humans, AI isn’t perfect. When automated processes make mistakes, the scale of those mistakes can become massive, leading to significant financial losses and compromised supplier relationships. And who’s responsible for the mistake? Do we check AI’s work? (And if we do that, will it even be worth the time saved?)

Two recent examples of automation disasters our clients came to us with:

- Inaccurate algorithms: One of our clients relied on automated algorithms to calculate royalty payments. Due to a faulty data input, a royalty payment was miscalculated. The payment recipient reported the error immediately, but no effective process existed to correct it. The issue went unresolved for over a year until our team identified it and manually corrected it within a few days.

- Undetected programming errors: Another client agreed to pay a supplier cost plus a 20% markup for supplies. A misinformed developer set up the system to process payments based on a 20% margin instead, which equates to a 25% markup on cost. The error went undetected until one of our auditors, reviewing cost documentation, realized the bill rate was 25% more than the actual cost. Every single order was processed at the wrong rate for nearly two years, a multi-million-dollar mistake.
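
The markup-versus-margin mix-up comes down to which base the percentage is applied to. A markup is a share of cost, so price = cost × (1 + markup). A margin is a share of price, so price = cost ÷ (1 − margin). A margin m therefore implies a markup of m ÷ (1 − m), and 0.20 ÷ 0.80 = 0.25. The short Python sketch below walks through that arithmetic. The function names and the $1,000 cost figure are illustrative assumptions, not details from the client’s actual system.

```python
from decimal import Decimal

def price_with_markup(cost: Decimal, markup: Decimal) -> Decimal:
    """Cost-plus pricing: the markup is a share of COST, so price = cost * (1 + markup)."""
    return cost * (1 + markup)

def price_with_margin(cost: Decimal, margin: Decimal) -> Decimal:
    """Margin pricing: the margin is a share of PRICE, so price = cost / (1 - margin)."""
    return cost / (1 - margin)

def implied_markup(cost: Decimal, price: Decimal) -> Decimal:
    """Markup on cost implied by a given price."""
    return (price - cost) / cost

# Illustrative figures only: $1,000 of documented supplier cost.
cost = Decimal("1000.00")
rate = Decimal("0.20")

contract_price = price_with_markup(cost, rate)  # what the contract allows
billed_price = price_with_margin(cost, rate)    # what the misconfigured system charged
overbilled = billed_price - contract_price      # excess on this single order

print(f"Contract price: {contract_price:,.2f}")
print(f"Billed price:   {billed_price:,.2f}")
print(f"Implied markup: {float(implied_markup(cost, billed_price)):.0%}")
print(f"Overbilled:     {overbilled:,.2f}")
```

Each order is overbilled by 5% of its cost, which is $50 for every $1,000. The gap looks small on any single invoice, and that is exactly why it can compound across two years of orders without anyone noticing.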

These examples highlight how human error can creep into training algorithms and programming automated processes. AI tools also have limitations in their ability to understand language and context, including:

- Interpreting ambiguity and subjectivity: A term like “reasonable efforts” can be interpreted differently depending on the situation, while assessing performance standards often involves qualitative evaluations that AI can’t perform accurately.

- Identifying erroneous data inputs: Many processes rely on data oracles with limited controls to ensure the accuracy of the submitted information. For instance, a system may require a supplier to disclose costs but require no evidence to verify the cost reported.

- Reading industry-specific language or contractually defined terms: AI may misinterpret an acronym for a new regulation and miss critical requirements. Contracts also don’t always precisely define a term such as “cost.” Is cost the amount invoiced by a third party, or the amount paid to a third party after discounts? What about rebates?

- Navigating relationships and conflict: Technology also cannot replace the contextual understanding necessary to interpret non-compliance, or the emotional and social intelligence needed to navigate resolutions with suppliers.
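
To show why an undefined “cost” matters, here is a minimal sketch that prices one hypothetical third-party charge under three possible definitions. It applies the cost-plus 20% markup from the earlier example. The `ThirdPartyCharge` record, the definition labels, and the dollar amounts are all illustrative assumptions. A real contract might define cost in yet another way.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class ThirdPartyCharge:
    """Hypothetical record of one third-party purchase passed through to the customer."""
    invoiced: Decimal  # amount on the third party's invoice
    discount: Decimal  # e.g., an early-payment discount actually taken
    rebate: Decimal    # e.g., a volume rebate received later

def cost_basis(charge: ThirdPartyCharge, definition: str) -> Decimal:
    """Return 'cost' under one of three hypothetical contract definitions."""
    if definition == "invoiced":
        return charge.invoiced
    if definition == "paid":
        return charge.invoiced - charge.discount
    if definition == "net_of_rebates":
        return charge.invoiced - charge.discount - charge.rebate
    raise ValueError(f"unknown cost definition: {definition!r}")

MARKUP = Decimal("0.20")  # cost plus 20%
charge = ThirdPartyCharge(
    invoiced=Decimal("10000"), discount=Decimal("200"), rebate=Decimal("300")
)

# Same charge, same markup: only the definition of "cost" changes.
billable = {
    d: cost_basis(charge, d) * (1 + MARKUP)
    for d in ("invoiced", "paid", "net_of_rebates")
}
for definition, amount in billable.items():
    print(f"{definition:>15}: {amount:,.2f}")
```

With these sample numbers, the billable amount ranges from $11,400 to $12,000 on a single charge. A tool that doesn’t know which definition the contract intends has no basis for choosing among them. Resolving that question takes a person reading the contract.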

No matter how powerful and useful technology can be, it still has weaknesses. AI is simply a tool to augment human skills, not replace them.

Balancing AI and Human Expertise in Contract Compliance Audits

The best auditors leverage AI for repeatable tasks like data entry and processing, freeing up their time to focus on higher-value activities like relationship management, conflict resolution, and supplier negotiations that can’t be replicated with technology (yet). Understanding when to use or not to use technology is what separates good auditors from great ones.

AI should act as a first-pass filter to quickly flag potential issues or areas that require further attention. Then, auditors can step in to do more in-depth work.
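
As a rough illustration of that division of labor, the Python sketch below acts as a first-pass filter. It compares each billed amount against what the contract terms imply and queues exceptions for an auditor. It does not judge compliance or trigger recoveries. Every name here is hypothetical, and the 0.5% tolerance is an arbitrary assumption rather than an industry standard: `InvoiceLine`, `Flag`, `first_pass_review`, and the sample data. Real audit tooling would draw on far richer data and rules.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Iterable, List, Optional

@dataclass(frozen=True)
class InvoiceLine:
    """Hypothetical invoice line: supplier-documented cost vs. amount billed."""
    line_id: str
    documented_cost: Optional[Decimal]  # None when no cost evidence was provided
    billed: Decimal

@dataclass(frozen=True)
class Flag:
    line_id: str
    reason: str

def first_pass_review(
    lines: Iterable[InvoiceLine],
    markup: Decimal,
    tolerance: Decimal = Decimal("0.005"),  # assumed 0.5% threshold, not a standard
) -> List[Flag]:
    """Flag lines for an auditor to examine. A flag is a prompt for review, not a finding."""
    flags: List[Flag] = []
    for line in lines:
        if line.documented_cost is None:
            flags.append(Flag(line.line_id, "no cost documentation"))
            continue
        if line.documented_cost <= 0:
            flags.append(Flag(line.line_id, "non-positive documented cost"))
            continue
        expected = line.documented_cost * (1 + markup)
        # Relative gap between the billed amount and what the contract terms imply.
        deviation = (line.billed - expected) / expected
        if abs(deviation) > tolerance:
            flags.append(
                Flag(line.line_id, f"billed {float(deviation):+.1%} vs. contract terms")
            )
    return flags

# Illustrative data only.
lines = [
    InvoiceLine("A-1", Decimal("1000"), Decimal("1200")),  # matches cost plus 20%
    InvoiceLine("A-2", Decimal("1000"), Decimal("1250")),  # priced as a 20% margin
    InvoiceLine("A-3", None, Decimal("800")),              # no supporting evidence
]

for flag in first_pass_review(lines, markup=Decimal("0.20")):
    print(f"{flag.line_id}: {flag.reason}")
```

The filter returns a review queue rather than acting on its findings. It can show that line A-2 is billed above the expected amount or that line A-3 lacks evidence. Deciding whether an exception is an error, a negotiated change, or a recoverable overcharge is still the auditor’s job.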

Approaching technology with caution is critical. For example, autopilot is ubiquitous in air travel, but commercial airliners still fly with at least two human pilots trained to step in if needed. Humans are the fail-safe.

How to Spot AI Overuse When Selecting an Audit Firm

Contract compliance audits are best performed by an objective third-party firm, but in a tech-saturated industry, it can be hard to pinpoint when firms overuse AI and other technologies. A few red flags to watch out for include:

- Overemphasis on AI instead of auditor credentials: If the firm heavily markets its technology’s capabilities without equally highlighting its human auditors’ experience and qualifications, that’s a red flag. Contract compliance audits require experienced professionals who can interpret AI findings within the context of industry-specific knowledge.

- Limited access to experts: An effective audit process requires regular client communication and collaboration. Does the firm have in-house auditors dedicated to your team, or do they rely on offshore contractors and automated bots for client interactions? Be wary of audit firms that offer minimal opportunities to review and discuss audit findings with a human, not a bot.

- Fees based on what’s identified, not what’s recovered: Many firms with contract software can flag lots of unusual or non-compliant transactions. But findings don’t matter if you can’t recover them, so be cautious of fees tied to what a firm identifies rather than what you actually recover. Like it or not, context matters. Only humans can do the complex work of combining context with the relevant facts and organizing them into a digestible form that supports informed decision-making. Ultimately, what really matters is the ability to negotiate recoveries and resolutions with suppliers, and that requires social intelligence and human experience.

Ready to engage an expert partner that balances human expertise with AI technology? At SC&H, we use technology to enhance, not replace, our work for you. Our Contract Compliance Audit group is made up of 100% full-time, US-based CPAs, CFEs, and CIAs, and it brings 30 years of experience to help you cut costs and recover millions faster. Connect with our team to learn how we can help your organization prevent margin erosion and build long-term success.